I don’t come from a long line of distinguished scientists or researchers like the Frankensteins (pronounced Fronk N Steen). My lineage is made up mostly of civil servants, teachers and a few mechanical engineers. However, I do know how to distinguish between abnormal (pronounced Abbey Normal) and normal when conducting a product evaluation. I also know that my evaluation is only as good as the criteria I set for measuring it and the process I use to conduct it.

With that in mind, here are some suggestions for properly evaluating a wireless solution that you want to deploy in your school or business.

*Note: these suggestions are meant for those with limited budgets who lack the lab environments or high-end enterprise testing software needed for detailed, in-depth spectrum analysis, packet analysis and capacity loading of wireless network solutions.

1. Establish which devices you will test with

This should mimic what you expect your users to have as closely as possible. Each type of device has performance characteristics that affect the wireless network. Decide on a demographic that reflects what the environment will most likely look like and test with that. Here is what a demographic for a wireless network might look like: 50% laptops (802.11n 2x2), 25% tablets (802.11n 1x1), 25% mobile phones (802.11n 1x1). Then stay consistent and use the same device mix across all the solutions you evaluate. Keep in mind that the types of devices you test with will affect air time utilization on the network. Single stream (1x1) devices take up more air time than dual or three stream devices to do the same thing. An iPad will generally use up to 3x the air time of a laptop with a three stream (3x3) chipset.
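To get a rough feel for how the device mix drives air time, here is a minimal Python sketch. It rests on assumptions that are not part of the recommendations above: every client moves the same amount of data, and each spatial stream runs at an idealized 802.11n rate of 65 Mbps (20 MHz channel, MCS 7, long guard interval). Real rates are lower and vary with signal quality, so treat the output as an illustration only.

```python
# Rough air time estimate for a device mix.
# ASSUMPTION: idealized 802.11n PHY rate per spatial stream (20 MHz channel,
# MCS 7, long guard interval). Protocol overhead and retries are ignored.
RATE_PER_STREAM_MBPS = 65.0


def airtime_seconds(payload_megabits, spatial_streams):
    """Time on air needed to move a payload at the idealized PHY rate."""
    return payload_megabits / (RATE_PER_STREAM_MBPS * spatial_streams)


def relative_airtime(device_mix, payload_megabits=100.0):
    """Return each device class's share of total air time.

    device_mix maps a class name to (fraction of clients, spatial streams).
    Every client is assumed to transfer the same payload.
    """
    weighted = {
        name: fraction * airtime_seconds(payload_megabits, streams)
        for name, (fraction, streams) in device_mix.items()
    }
    total = sum(weighted.values())
    return {name: value / total for name, value in weighted.items()}


# The example demographic from the text above.
EXAMPLE_MIX = {
    "laptops (2x2)": (0.50, 2),
    "tablets (1x1)": (0.25, 1),
    "phones (1x1)": (0.25, 1),
}

if __name__ == "__main__":
    for name, share in relative_airtime(EXAMPLE_MIX).items():
        print(f"{name}: {share:.0%} of air time")
```

Under these assumptions the laptops, although they are half of the clients, account for only about a third of the air time, and a 1x1 device needs three times the air time of a 3x3 device for the same transfer.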

2. Identify the applications you will test with

Again, this should mimic the real world as much as possible. Will it be a teaching application, a video, a medical application, etc…?

For example, stream a video from a local server using Plex on a wired desktop and VLC streamer on an iPad. Use Handbrake to prepare a video file using the iPad profile, load the file into Plex on the wired desktop, and then use VLC streamer on the iPad to watch it. You can stream to multiple clients this way if you want to stress test the solution.

You can also use bandwidth-generating test applications like iPerf/jPerf. We use iPerf3 and WiFiPerf from Access Agility for throughput testing on iOS devices. For laptops, run iPerf3 in server mode on a wired desktop and iPerf3 in client mode on the wireless client.

In-house at SecurEdge Networks we use an assessment application from Ixia that runs on the wireless devices, generates actual application traffic like voice, video and data, and measures performance against industry-established best-practice SLAs.
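If you want to repeat the laptop throughput test the same way for every solution, a small wrapper can help. The following Python sketch is one possible approach, not a tool we ship: it assumes `iperf3` is installed on the wireless client and that a wired desktop is already running `iperf3 -s`. The function names and the server address in the usage note are made up for illustration.

```python
import json
import subprocess


def build_iperf3_command(server_host, seconds=10, reverse=False):
    """Build an iperf3 client command line that emits a JSON report."""
    command = ["iperf3", "-c", server_host, "-t", str(seconds), "-J"]
    if reverse:
        # -R makes the server send and the client receive (downlink test).
        command.append("-R")
    return command


def throughput_mbps(report):
    """Extract receiver-side TCP throughput in Mbps from an iperf3 JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def run_throughput_test(server_host, seconds=10, reverse=False):
    """Run iperf3 against server_host and return the measured throughput in Mbps."""
    completed = subprocess.run(
        build_iperf3_command(server_host, seconds, reverse),
        capture_output=True,
        text=True,
        check=True,  # raise if iperf3 exits with an error
    )
    return throughput_mbps(json.loads(completed.stdout))
```

With the server running on the wired desktop, calling `run_throughput_test("192.0.2.10")` from the wireless laptop (substituting your own server address for that placeholder) returns one number per run. Record it alongside the access point under test so the runs can be compared afterwards.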

3. Set up your test environment

This is a critical piece of the evaluation because you need to control this environment as much as possible. Testing in an environment with lots of RF interference is not a level playing field for the solutions you are evaluating. RF environments are dynamic and change all the time from minute to minute and hour to hour. Minimize potential disruptions to the testing.

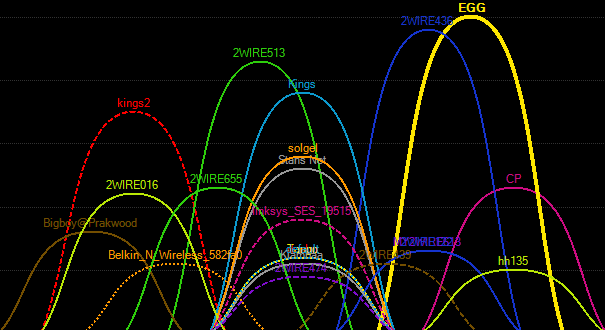

- Use tools to look at the RF spectrum, see what is happening, and choose a location with the least activity. Use MetaGeek’s inSSIDer tool to view channel use in both the 2.4 GHz and 5 GHz bands.

Testing in this environment would be disastrous!

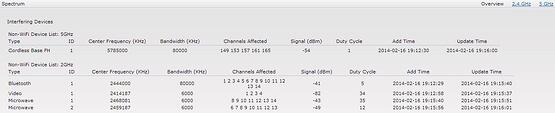

- Use the products you are evaluating for spectrum analysis. Most vendors now include spectrum analysis in their solutions. For instance, if you are evaluating vendor A’s wireless access points, use vendor B’s access point in spectrum analysis mode to monitor the air. The two vendors’ hardware won’t interfere with each other since one is in a monitor-only mode. Then switch roles when evaluating the other vendor’s product. Spectrum analysis will tell you if microwave ovens, Bluetooth devices, video transmitters or other non-Wi-Fi devices are interfering with your testing.

Between the Bluetooth device and the microwave oven, the 2.4 GHz spectrum is looking spent!

- Eliminate the network as a variable. Put the access points on their own subnet or network segment if possible. You don’t want a broadcast flood or something else coming from the wired network interfering with the evaluation.

- Mount the access points in their optimal position. If an access point is intended to be mounted on the ceiling because it has a down-tilt omnidirectional antenna pattern, then placing it face up on a table is not a valid way to test it.

- Use the same cable, switch and switch port to connect the access points. Eliminate the wired network hardware as a variable in the evaluation.

- If possible, shut off your current WLAN solution during the evaluation to keep the two systems from interfering with each other.

4. Involve an engineer from the vendor you are evaluating

Because every environment is different, the solution needs to be optimized for it. Right out of the box the access points are set to defaults, are not optimized for your particular environment, and most likely have older shipping firmware installed. Product defaults also differ from vendor to vendor, so make sure comparisons are apples to apples.

Get the vendor’s engineer involved and let them know what you would like to do. Have the engineer make recommendations on configuration of the access point or ask them to walk you through it. This is a great way to learn the features of the product and to get it set just right for your tests. Discuss with them how the access point should be properly mounted or oriented for optimal performance.

Here is a case in point where a third party independent evaluation was done on hardware and the products that did really well had vendor engineers involved. The products that didn’t shine like they normally do were used with default settings and default firmware.

5. What not to do

Here are some things we have seen customers do as part of their evaluation process, along with the reasons we do not recommend them.

- Do not test applications from the Internet. This method introduces yet another variable into the evaluation equation. A hiccup from your ISP could cause video streams to stutter or someone downloading a large file in another part of the network could cause latency for the application you are testing. Keep the applications local on your internal network. If possible separate the test network from your production network to avoid issues with broadcast storms.

- Do not use signal range as a criterion for evaluation. This is a poor metric for evaluating performance on a couple of levels. First, unless you are diligent and turn off automatic RF management on the solution, the power levels may go up and down in response to spectrum events. You would need to go into each solution, turn this off, and set the access points to the same static transmit level (e.g. 12 dBm). Second, in the real world you would not want a device so far from the access point that it could not transmit back to it. Wireless devices (tablets, phones, laptops) and access points do not have the same transmit capabilities. Access points have 100 mW transmitters while a mobile device might have a 10 to 20 mW transmitter. Their transmit capabilities are asymmetrical, and if left that way they can cause “near-far” issues once the network is deployed.

- Do not test in a live environment without turning off IDS/IPS features of your current WLAN. The IDS/IPS modules on current WLAN products will identify your “test” environment as unauthorized and the access points as rogues and it will attempt to mitigate the “threat”. The mitigation techniques can and will disrupt your testing. Make sure you turn this feature off or manually authorize the test access points as valid devices.
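To put the transmit power mismatch from the signal range point above into numbers, it helps to convert milliwatts to dBm, the unit most access point configuration screens use. This short Python sketch uses the standard conversion (dBm = 10 × log10 of the power in mW); the helper names are only for illustration.

```python
import math


def mw_to_dbm(milliwatts):
    """Convert transmit power from milliwatts to dBm."""
    return 10.0 * math.log10(milliwatts)


def dbm_to_mw(dbm):
    """Convert transmit power from dBm back to milliwatts."""
    return 10.0 ** (dbm / 10.0)


def link_asymmetry_db(ap_mw, client_mw):
    """How many dB stronger the access point transmits than the client."""
    return mw_to_dbm(ap_mw) - mw_to_dbm(client_mw)


if __name__ == "__main__":
    for client_mw in (10, 20):
        gap = link_asymmetry_db(100, client_mw)
        print(f"100 mW AP vs {client_mw} mW client: {gap:.1f} dB gap")
```

A 100 mW access point is 20 dBm, while a 10 to 20 mW client is roughly 10 to 13 dBm, so the access point can be 7 to 10 dB louder than the device trying to answer it. That gap is why a client can still hear an access point that cannot hear the client.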

Making an investment in a WLAN solution is not a matter to be taken lightly. I know you know this because you are here researching how to properly evaluate such a solution. Since you have a vested interest in doing it correctly make sure that you set up your criteria and evaluation to accurately determine the best product for your needs. I hope this blog is useful to you and has helped point you in the right direction for your evaluation. Good Luck!